Nvidia is pushing deeper into open source AI, and this time it is doing it with both code and capital.

On Monday, the chipmaker confirmed it has acquired SchedMD, the company behind Slurm, one of the most widely used open source workload management systems in high-performance computing. At the same time, Nvidia unveiled a new set of open AI models designed to power the next generation of AI agents.

Together, the moves signal a clear strategy. Nvidia is not just building hardware for AI. It wants to control the open infrastructure that runs on top of it.

Slurm plays a quiet but essential role across research labs, data centers, and AI clusters worldwide. It helps schedule and manage workloads across thousands of GPUs and CPUs. That makes it critical for training large models and running complex AI systems at scale.
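For readers who have not used Slurm, here is a minimal sketch of what "scheduling a workload" looks like in practice. The helper `render_sbatch_script`, the job name, and the `train.py` command are hypothetical and chosen only for illustration. The `#SBATCH` directives are standard Slurm batch options, though real clusters typically need site-specific settings such as partitions and accounts.

```python
def render_sbatch_script(job_name, nodes, gpus_per_node, time_limit, command):
    """Return the text of a Slurm batch script for a multi-node GPU job.

    Hypothetical helper for illustration; not part of Slurm itself.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",                    # number of machines requested
        f"#SBATCH --ntasks-per-node={gpus_per_node}",  # here: one task per GPU
        f"#SBATCH --gres=gpu:{gpus_per_node}",         # GPUs requested on each node
        f"#SBATCH --time={time_limit}",                # wall-clock limit, HH:MM:SS
        "",
        f"srun {command}",                             # launch the tasks on the allocation
    ]
    return "\n".join(lines) + "\n"


# Example: 4 nodes with 8 GPUs each, capped at two hours (all values assumed).
script = render_sbatch_script("train-demo", 4, 8, "02:00:00", "python train.py")
print(script)
```

A script like this would normally be saved to a file and submitted with `sbatch`. Slurm then queues the job until the requested nodes and GPUs are free.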

Nvidia said Slurm will remain open source and vendor-neutral, even after the acquisition. The company stressed that SchedMD will continue operating the software independently, with ongoing support for the broader ecosystem.

That assurance matters. Slurm has long been trusted because it does not lock users into a single vendor. Any hint of proprietary control could have triggered backlash from the research and enterprise communities that rely on it.

SchedMD was founded in 2010 by Morris Jette and Danny Auble, the original developers behind Slurm. Auble currently serves as CEO. Slurm itself dates back to 2002 and has become a backbone technology for modern high-performance computing.

Financial terms of the deal were not disclosed. Nvidia declined to comment beyond its official announcement.

Still, the intent was clear. Nvidia described Slurm as critical infrastructure for generative AI and said it plans to keep investing in the technology to expand its reach across different systems and environments.

This acquisition also formalizes a long-standing relationship. Nvidia has worked closely with SchedMD for more than a decade, optimizing Slurm for GPU-heavy workloads as AI models grew larger and more demanding.

While the Slurm deal strengthens Nvidia’s grip on AI infrastructure, the company also made news on the model side.

On the same day, Nvidia introduced Nemotron 3, a new family of open AI models built for agentic systems. Nvidia claims the models are the most efficient open models available for building accurate, scalable AI agents.

Efficiency is the key message here. As companies race to deploy AI agents across workflows, compute costs and response speed have become major constraints.

Nemotron 3 is designed to ease those constraints.

The model family includes three variants. Nemotron 3 Nano targets lightweight, focused tasks where speed and low resource usage matter most. Nemotron 3 Super is built for multi-agent environments, where different AI agents need to collaborate and reason together. Nemotron 3 Ultra handles more complex, high-stakes workloads that require deeper reasoning.

Nvidia positioned the release as part of a broader commitment to open innovation.

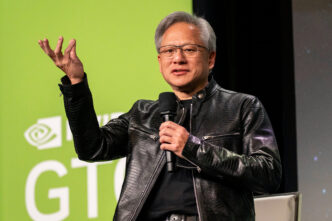

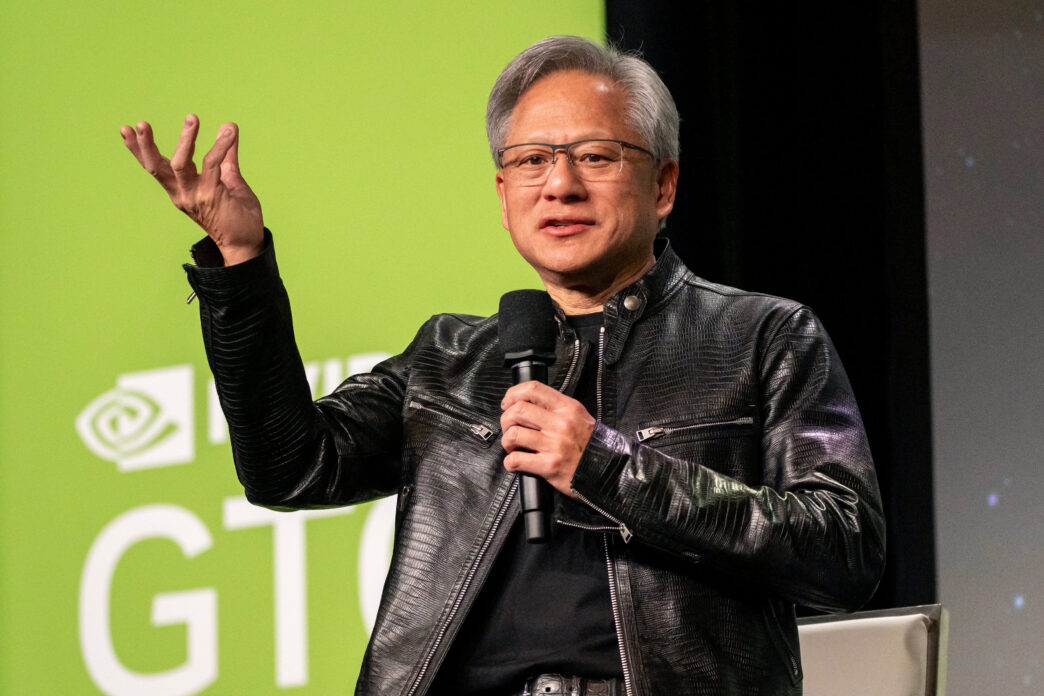

In its announcement, CEO Jensen Huang said open platforms are the foundation of AI progress and described Nemotron as a way to give developers more transparency and control when building agentic systems at scale.

That language reflects a noticeable shift in Nvidia’s messaging.

For years, Nvidia was known primarily as a hardware company. Now it is positioning itself as a provider of open AI platforms, competing not just with chipmakers but also with model developers and cloud platforms.

The Nemotron release follows a string of similar moves.

Just last week, Nvidia released Alpamayo-R1, an open reasoning vision-language model designed for autonomous driving research. Alongside that release, the company expanded documentation, workflows, and guides around its Cosmos world models, which are available as open source under permissive licenses.

Those models help developers simulate real-world environments, a critical capability for robotics and physical AI systems.

Taken together, the pattern is hard to miss.

Nvidia is betting that physical AI will define the next wave of growth. That includes robots, autonomous vehicles, industrial machines, and other systems that interact with the real world.

These applications demand more than just large language models. They need simulation, vision, reasoning, and real-time decision-making, all tightly integrated with specialized hardware.

By owning key layers of open infrastructure like Slurm and releasing open AI models optimized for its GPUs, Nvidia increases its influence across the entire AI stack.

It also creates a powerful feedback loop. Developers build on Nvidia’s open tools. Those tools run best on Nvidia hardware. More demand flows back to Nvidia’s chips.

Importantly, Nvidia is trying to do this without alienating the open source community.

Keeping Slurm vendor-neutral and releasing models under open licenses helps the company avoid the perception of locking developers in. Instead, Nvidia presents itself as an enabler, even as it deepens its strategic control.

That balance will be tested over time.

For now, Nvidia’s open source push appears to be gaining momentum. As AI workloads grow more complex and physical AI moves closer to deployment, the demand for scalable, efficient, and open infrastructure will only increase.

Nvidia is making sure it is already there when that demand explodes.